CrawlKit

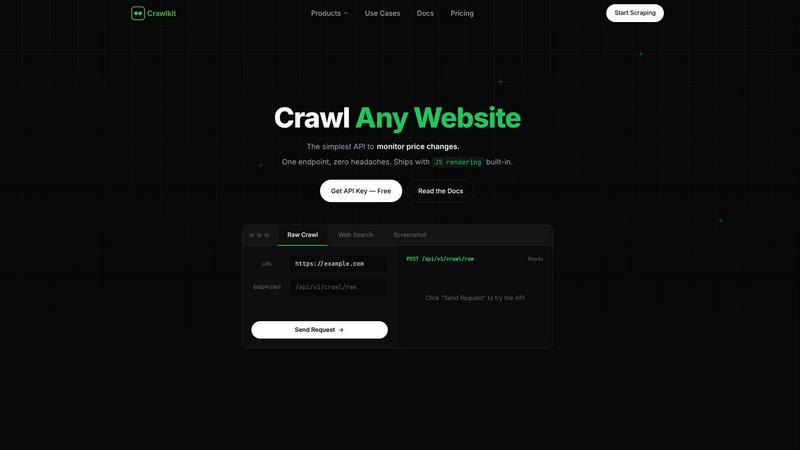

CrawlKit is an API-first platform for developers to effortlessly extract data and insights from any website.


CrawlKit is a web data extraction platform for developers and data teams who need reliable, scalable access to web data without maintaining their own scraping infrastructure. Scraping at scale usually means dealing with rotating proxies, headless browsers, anti-bot protections, and rate limits. CrawlKit handles these parts for you: when you send it a request, it takes care of proxy rotation, browser rendering, retries, and getting past blocking mechanisms, so you can focus on using the data rather than on collecting it.

Through a single interface, CrawlKit can return several kinds of web data, including raw page content, search results, visual snapshots, and structured professional data from platforms such as LinkedIn.
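To give a sense of what "handling retries" involves, here is a minimal, generic Python sketch of the retry-with-exponential-backoff logic that a self-hosted scraper would otherwise have to maintain. It is not CrawlKit's API or implementation. `fetch_with_retries`, `FetchError`, and the backoff parameters are hypothetical names chosen for illustration, and the fetch function is passed in so the logic can be exercised without network access.

```python
import random
import time
from typing import Callable, Optional


class FetchError(Exception):
    """Hypothetical error a fetch function raises for a retryable failure (e.g. HTTP 429 or a blocked request)."""


def fetch_with_retries(
    fetch: Callable[[str], str],
    url: str,
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url), retrying retryable failures with exponential backoff plus jitter.

    Illustrative sketch only; not part of any CrawlKit client library.
    """
    last_error: Optional[FetchError] = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except FetchError as err:
            last_error = err
            if attempt == max_attempts - 1:
                break  # no attempts left, so don't sleep again
            # Wait base_delay * 2^attempt seconds, plus up to 10% random jitter
            # so that many concurrent clients don't retry in lockstep.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error
```

In real use, `fetch` would wrap an HTTP client and raise `FetchError` on responses worth retrying. A hosted service that handles retries for you removes this layer, along with the proxy and browser management around it.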
