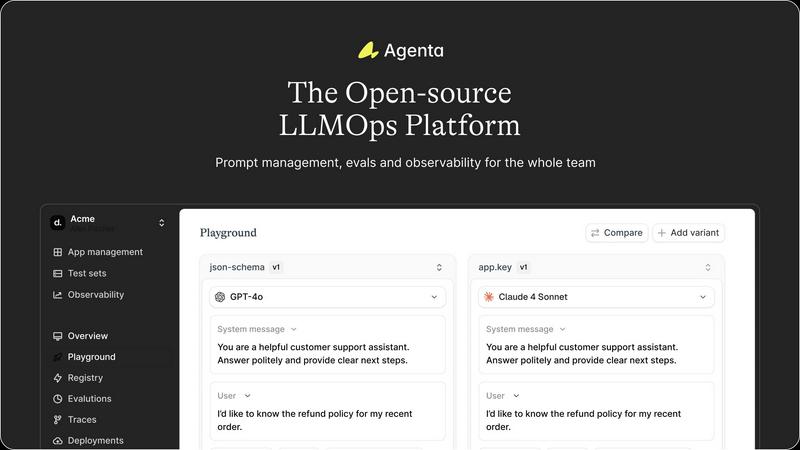

Agenta

Agenta is the open-source platform where teams collaborate to build and manage reliable LLM applications.

Agenta is a collaborative, open-source LLMOps platform that brings AI teams together to build and ship reliable LLM applications. It targets three problems that slow down AI development: unpredictable model behavior, scattered workflows, and siloed teams. Agenta puts developers, product managers, and subject matter experts in a single environment and replaces ad-hoc processes with a structured, evidence-based workflow. The platform acts as the team's single source of truth for the whole LLM development lifecycle, from prompt experimentation and evaluation to production observability and debugging. Every team member can contribute their expertise safely, compare iterations systematically, and validate each change before it reaches users, so teams can ship robust AI products faster and with more confidence.

You may also like:

Blueberry

Blueberry is a Mac app that combines your editor, terminal, and browser in one workspace. Connect Claude, Codex, or any other model, and it gets context from all three.

Anti Tempmail

A transparent email verification and intelligence API for product, growth, and risk teams.

My Deepseek API

Affordable, reliable, flexible: a Deepseek API for all your needs.