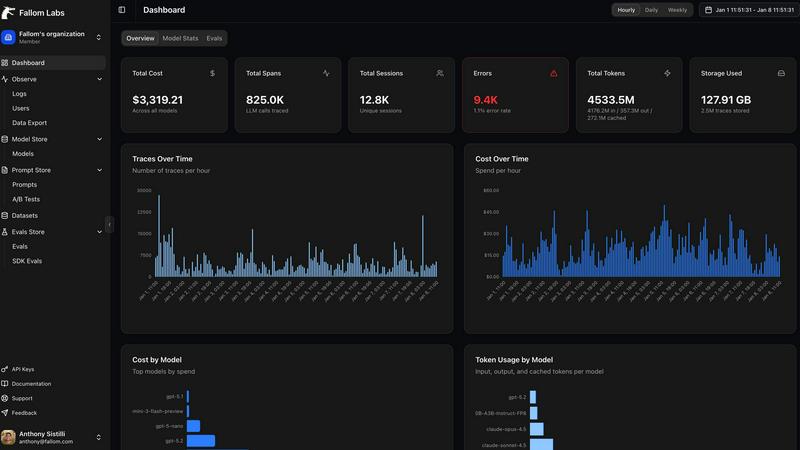

Fallom is a collaborative observability platform for teams that build and operate AI applications. With LLMs and AI agents, a single user query can trigger a cascade of model calls, tool executions, and conditional logic, which traditional monitoring is not designed to follow. Fallom gives engineering, product, and business teams a shared view of these workloads.

It provides real-time, end-to-end tracing for every LLM interaction in production and captures the full context: the initial prompt, the model's output, every tool call, token usage, latency, and associated cost. From a single dashboard, teams can debug agent failures, attribute spend across projects, and support compliance with evolving regulations.

Fallom integrates through a single OpenTelemetry-native SDK that is set up in minutes, so everyone works from the same contextual data when building reliable, efficient, and cost-effective AI applications.
